Kon-Tiki tells the true story of explorer Thor Heyerdahl and his crew, who crossed the Pacific Ocean on a balsa wood raft in 1947. Directed by Joachim Rønning and Espen Sandberg, the film was a Norwegian production with water sim and creature effects crafted by five Scandinavian VFX studios: Fido, Gimpville, Important Looking Pirates, Storm Studios and Blink Studios.

Kon-Tiki was filmed in several countries, on the open ocean and in a water tank in Malta. “The vendor VFX supervisors were responsible for shooting references and data acquisition,” explains production visual effects and animation supervisor Arne Kaupang. “Chrome/grey ball references were used, but a lot of the plates were also matched manually in post. A Sony HXR-MC1P was used as a witness camera throughout the period we shot in the water tank, and its timecode was synced to the master timecode, so the footage could be used for tracking where needed. Metadata from the ARRI Alexa cameras (with LDS) was also available, as was lens data for each setup. 3V-5V LEDs were used as tracking markers when we shot the storm sequence in the tank at night.”
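Because the witness camera shared the master timecode, lining up a witness frame with a plate frame reduces to arithmetic on frame counts. The sketch below assumes non-drop-frame SMPTE timecode at 25 fps (the article does not state the frame rate), and the function names are hypothetical rather than part of any tool used on the show.

```python
# Hypothetical helper names; assumes non-drop-frame timecode.
FPS = 25  # assumed project frame rate; the article does not state it


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if not 0 <= frames < fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def witness_frame_for_plate(plate_start_tc: str, witness_start_tc: str,
                            plate_frame: int, fps: int = FPS) -> int:
    """Return the witness-clip frame index matching a frame index within the plate clip.

    Both cameras record the same master timecode, so the clips are offset by the
    difference between their start timecodes. A negative result means the witness
    camera was not yet rolling at that plate frame.
    """
    offset = timecode_to_frames(plate_start_tc, fps) - timecode_to_frames(witness_start_tc, fps)
    return plate_frame + offset


# Plate starts five seconds after the witness clip: 125 frames at 25 fps.
print(witness_frame_for_plate("10:00:05:00", "10:00:00:00", 10))  # 135
```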

Realistic water sims were crucial to the visual effects in the film, as were the various sea creatures. “My number one concern regarding the CG animals was weight,” says Kaupang. “Weight, weight, weight… I could not stress this enough and was pretty adamant about it. Renders, textures and overall design may look fantastic and super realistic, but if the weight is off in the animation and the animal moves too fast, feels too light or too heavy, the audience can tell immediately. We humans are so used to seeing this every day that we can tell in an instant if it is off.”

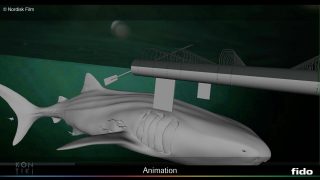

“The whale shark sequence done by Fido was also a key sequence in the movie, and it had to feel large, majestic and massive in its movements,” adds Kaupang. “I think Fido pulled it off great, and the audience loved it as well. Fido also did the bioluminescent creatures, the crab and all the CG fish under the raft. In a couple of shots they worked closely with ILP, where Fido did the fish and ILP did the sharks – in the same shots. The only live animal in the movie was the parrot Lorita, but even she was CG in one sequence. All the other animals you see in the movie are CG animated.”

Interestingly, two versions of Kon-Tiki were made – one in Norwegian and one in English for the international market. “We shot all dialogue shots of the actors twice in the different languages,” says Kaupang. “This meant we also had to redo around 100 VFX shots for the English version, due to the different sets of plates.”

FIDO’s approach to ocean extensions was to keep as much of the live action water interacting with the raft as possible, supplemented with extra splashes and CG water created with Houdini and V-Ray. “We had to do some up-front R&D on our pipeline to get V-Ray to talk to Houdini,” says FIDO visual effects supervisor Mattias Lindahl. “This work was led by lighting TD Johan Gabrielsson and FX artist Björn Henriksson. We used the vector displacement within V-Ray to create the main water surface. The vector displacement texture was then baked and sent to Houdini, where the wakes, splashes and foam were created. We then passed those water elements back from Houdini as textures and V-Ray proxies and rendered them out with V-Ray.”
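Vector displacement differs from ordinary height displacement in that each texel stores a full 3D offset rather than a single height, which lets a surface shift sideways into sharp, choppy crests. The minimal sketch below contrasts the two, assuming object-space vectors and a nearest-texel lookup (a real renderer would filter the texture); all names are hypothetical and this is not V-Ray's implementation.

```python
# Hypothetical helper names; illustrates the idea of vector vs. height displacement.


def sample_nearest(texture, u: float, v: float):
    """Nearest-texel lookup; texture is a list of rows, UVs are clamped to the image."""
    rows, cols = len(texture), len(texture[0])
    i = min(max(int(v * rows), 0), rows - 1)
    j = min(max(int(u * cols), 0), cols - 1)
    return texture[i][j]


def height_displace(points, uvs, height_map, amount: float = 1.0):
    """Scalar displacement: each point can only move along world up (y)."""
    return [(x, y + amount * sample_nearest(height_map, u, v), z)
            for (x, y, z), (u, v) in zip(points, uvs)]


def vector_displace(points, uvs, vector_map, amount: float = 1.0):
    """Vector displacement: each point moves by the full (dx, dy, dz) stored at its UV."""
    out = []
    for (x, y, z), (u, v) in zip(points, uvs):
        dx, dy, dz = sample_nearest(vector_map, u, v)
        out.append((x + amount * dx, y + amount * dy, z + amount * dz))
    return out


# Two grid points; the vector map pushes them toward each other as well as up,
# which a height map alone cannot do.
grid = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
uvs = [(0.25, 0.5), (0.75, 0.5)]
vmap = [[(0.3, 0.8, 0.0), (-0.2, 0.5, 0.0)]]
print(vector_displace(grid, uvs, vmap))
```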

“Seaming the practical and CG water together was one of my biggest worries ahead of starting this project,” adds Lindahl. “I must admit, though, I was very impressed by how the team at FIDO handled it. We basically started off by matching the sun position to the plate. We then tweaked our displacement textures to match the choppiness of the live action water. The rest was just plain old elbow-grease compositing, creating animated soft mattes for the seam between the two elements.”
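An animated soft matte of this kind amounts to a per-pixel mix between the plate and the CG water, with a feathered ramp across the seam whose position is keyframed over the shot. A minimal sketch, assuming a horizontal seam, a smoothstep falloff and linear keyframe interpolation; these are hypothetical simplifications, since in production the mattes were built by hand in comp.

```python
# Hypothetical helper names; a simplified, single-channel illustration.


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite ramp: 0 at or below edge0, 1 at or above edge1, smooth in between."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def seam_position(frame: float, key_a: tuple, key_b: tuple) -> float:
    """Interpolate the seam's row between two (frame, row) keyframes, holding at the ends."""
    (f0, y0), (f1, y1) = key_a, key_b
    t = min(max((frame - f0) / (f1 - f0), 0.0), 1.0)
    return y0 + (y1 - y0) * t


def soft_matte(row: float, seam_row: float, feather: float) -> float:
    """Matte for one pixel row: 0 keeps the plate, 1 shows CG, ramped over `feather` rows."""
    return smoothstep(seam_row - feather / 2.0, seam_row + feather / 2.0, row)


def blend(plate: float, cg: float, matte: float) -> float:
    """Mix a plate pixel and a CG pixel by the matte value."""
    return plate * (1.0 - matte) + cg * matte


seam = seam_position(1051, (1001, 100.0), (1101, 200.0))  # halfway between the keys
value = blend(0.2, 0.8, soft_matte(150.0, seam, feather=20.0))  # midway mix on the seam
```

Using a smooth ramp rather than a hard cut hides the seam even when the CG displacement and the real water do not line up perfectly at every frame.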

For the signature whale shark shots, FIDO could draw on the real encounter from the original voyage. “We learned early on in the project that the whale shark sequence was pivotal for the directors Joachim Rønning and Espen Sandberg and producer Aage Aaberge,” notes Lindahl. “It relates back to the Oscar-winning documentary that was made during the actual voyage. The crew captured the encounter with a whale shark on film. The whale shark is also featured at the Kon-Tiki Museum in Oslo. So we knew the pressure was on to make this sequence seamless.”

The visual effects team also looked to YouTube and nature documentary footage for reference, then sculpted the creature in ZBrush, textured it in MARI, rigged and animated it in Maya, rendered in V-Ray and composited in Nuke. The team played with the creature’s size somewhat for the final shots. “The largest whale shark that has ever been found is 12.5 meters,” says Lindahl. “We started out at that size, but it soon became clear that we had to stretch reality a bit to make the creature look menacing enough. Because of refraction in the water, the size looked quite different from shot to shot, so we had to make sure we had a scalable rig to be able to tweak the size per shot. In the shot where the whale shark swims under the raft, we had it at 25 meters in length.”

In a sequence based on a documented episode from the actual 1947 voyage, the crew spot a group of mysterious self-illuminating creatures. “We got free rein when it came to the design of the creatures,” recalls Lindahl. “It was important to the directors that they had some kind of grounding in reality, but other than that it was very much up to us to come up with a solution. We found a little deep-sea creature called ‘Tomopteris helgolandica’, which in reality is only a couple of centimeters long. Our head modeler Magnus Eriksson did a great job of capturing the feel of this little creature and transformed it into a five-meter glowing beast. Senior animator Staffan Linder analyzed its movements and re-created a scaled-up version of its fluid motion.”

FIDO also created various fish for their sequences, modeling in ZBrush and texturing in Maya. Says Lindahl: “We created a bespoke flocking system in Houdini, so that the animators only had to animate a leader for each shoal and the rest of the fish would follow in an intelligent way.” That kind of ingenuity was a key part of the production, and Lindahl adds that the film “was a fantastic experience, working on what was, by Scandinavian measures, a huge production. It was produced on a budget close to 10% of what it would have been if it were a ‘Hollywood production’. Still, we managed to produce results that look a lot more expensive than they actually are.”
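The leader-follower idea can be sketched as a simple steering model: only the leader is keyframed, and every other fish accelerates toward the leader, matches its velocity and keeps its distance from close neighbours. This is a generic boids-style sketch in plain Python with hypothetical names and gains, not FIDO's Houdini system.

```python
import math

# Hypothetical helper names and gains; a generic leader-follower steering sketch.


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def length(a):
    return math.sqrt(sum(x * x for x in a))


def clamp_length(v, max_len):
    """Shorten v to max_len if it is longer; otherwise return it unchanged."""
    n = length(v)
    return scale(v, max_len / n) if n > max_len else v


def step_shoal(leader_pos, leader_vel, followers, velocities, dt=1.0 / 24,
               follow_gain=2.0, align_gain=1.0,
               separation_radius=0.5, separation_gain=4.0, max_speed=3.0):
    """Advance every follower one timestep behind an animated leader."""
    new_pos, new_vel = [], []
    for i, (p, v) in enumerate(zip(followers, velocities)):
        # Cohesion: accelerate toward the leader's current position.
        accel = scale(sub(leader_pos, p), follow_gain)
        # Alignment: steer toward the leader's velocity.
        accel = add(accel, scale(sub(leader_vel, v), align_gain))
        # Separation: push away from neighbours inside separation_radius,
        # more strongly the closer they are.
        for j, q in enumerate(followers):
            if i == j:
                continue
            offset = sub(p, q)
            d = length(offset)
            if 0.0 < d < separation_radius:
                accel = add(accel, scale(offset, separation_gain * (separation_radius - d) / d))
        v = clamp_length(add(v, scale(accel, dt)), max_speed)
        new_vel.append(v)
        new_pos.append(add(p, scale(v, dt)))
    return new_pos, new_vel


# Usage: only the leader is keyed; here it swims along +x at 1 m/s for two seconds.
fish = [(0.0, 0.0, -1.0), (0.0, 0.5, -1.5), (0.0, -0.5, -1.5)]
vels = [(0.0, 0.0, 0.0)] * len(fish)
for frame in range(48):
    leader = (frame / 24.0, 0.0, 0.0)
    fish, vels = step_shoal(leader, (1.0, 0.0, 0.0), fish, vels)
```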

In one two-minute (4,000-frame) visual effects shot, the audience witnesses a trip from day 51 to day 101 of the expedition, as the camera travels from the planet’s surface into space. “On this shot a lot of time was spent on the animatic,” says Gimpville visual effects supervisor Lars Erik Hansen, who also served as the overall effects supe and consultant in the film’s pre-production stage. “This scene was of high emotional importance to the story, and it took a lot of tweaking to get the camera animation and timing approved. The camera was tracked in SynthEyes and modified in Maya, while the rest is Houdini only.”

The camera travels through CG clouds created by Gimpville. “We used Houdini’s volumetric CVEX shaders to create high-res clouds at render time, while using the same underlying shader setup to displace and display a volumetric proxy of the clouds in the viewport,” explains Gimpville Houdini artist Ole Geir Eidsheim. “We did have some stability issues since the whole scene was built to scale, and the early versions of the new Houdini 12 OpenGL viewport did not like that very much.”

“The ocean displacement was a shader with multiple Houdini Ocean Toolkit assets masked with fractal noise to avoid tiling patterns,” adds Eidsheim. “The outer blue atmosphere was added as a comp effect at a later stage to allow fine control according to reference images. Render times were definitely a big issue with so many frames. Since everything was set up to scale and so many elements were to be rendered with raytracing, we had to walk a thin line between volume sampling and displacement quality to stay below the required two-hour limit per frame.”
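The two-hour ceiling adds up quickly across a shot this long. As a rough upper bound, assuming every one of the 4,000 frames rendered right at the limit in a single pass (the article gives only the per-frame cap, not actual render times):

$$
4000\ \text{frames} \times 2\ \frac{\text{h}}{\text{frame}} = 8000\ \text{render-hours} \approx 333\ \text{machine-days}
$$

Any extra volume samples or displacement detail raised the cost of every frame in that total, which is why the two had to be balanced against each other.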

The space background was an augmented image originally acquired from French photographer and reporter Serge Brunier, a noted specialist in depictions of astronomical subjects. “In the end,” says Hansen, “the only things not in 3D are the crew, some of the raft and the matte painting, and it took one guy (Christian Korhonen) three months to complete the 3D for the entire sequence.” Lead compositor Jan Svalland oversaw the final compositing in Nuke, with the sun lens flare created in After Effects using the Optical Flares plugin.

Gimpville also contributed CG ocean sims for a storm sequence off the coast of South America. “We used the Houdini Ocean Toolkit, originally released by Drew Whitehouse, for the ocean displacement and rendering,” says Eidsheim. “A hallmark of HOT is its tiling nature (because the displacement is computed over an area of limited size), so we had to construct shaders with procedural noise textures that blended between 5-6 different HOT displacements with different values and seeds to get a natural-looking ocean without visible tiling. Thanks to the flexible nature of Houdini’s VOP node toolkit, we set up an ocean displacement shader and made a geometry deformer that mirrors the settings of the ocean shader, so we could get visual feedback on how the surface would look at render time while also using it as a base for particle simulations. This way we could have a high-resolution grid displaced at render time, and a more manageable proxy to work on while doing simulations.”
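The two ideas in this setup, blending several tiling ocean patches with non-repeating masks and driving both the render-time displacement and the proxy deformer from one definition, can be sketched in a few functions. This is a minimal sketch under stated assumptions: HOT itself synthesises its tile with an FFT, whereas here a handful of sine waves with integer wave numbers stand in for a tile, and smooth sine-based weights stand in for the procedural noise masks. All names and numbers are hypothetical.

```python
import math
import random

# Hypothetical stand-ins for HOT tiles and noise masks; illustrative only.
G = 9.81  # gravity, m/s^2


def make_tile(seed: int, period: float = 50.0, n_waves: int = 8, amplitude: float = 0.5):
    """Build one periodic height field that repeats exactly every `period` metres.

    Integer wave numbers per tile period guarantee the tiling, mimicking the
    limited-area nature of an FFT ocean tile.
    """
    rng = random.Random(seed)
    waves = []
    for _ in range(n_waves):
        nx, nz = rng.randint(-4, 4), rng.randint(1, 4)
        kx, kz = 2.0 * math.pi * nx / period, 2.0 * math.pi * nz / period
        amp = amplitude * rng.random() / math.hypot(nx, nz)  # shorter waves get smaller amplitude
        waves.append((kx, kz, amp, rng.uniform(0.0, 2.0 * math.pi)))
    return waves


def tile_height(waves, x: float, z: float, t: float = 0.0) -> float:
    """Sum of travelling sine waves using deep-water dispersion, omega = sqrt(g * |k|)."""
    return sum(a * math.sin(kx * x + kz * z - math.sqrt(G * math.hypot(kx, kz)) * t + phase)
               for kx, kz, a, phase in waves)


def blend_weights(x: float, z: float, n: int):
    """Smooth, positive weights summing to 1 that do not line up with the 50 m tile period."""
    raw = [1.05 + math.sin(0.013 * math.sqrt(i + 2) * (x + 0.7 * z) + i) for i in range(n)]
    total = sum(raw)
    return [w / total for w in raw]


TILES = [make_tile(seed) for seed in range(6)]  # the article mentions 5-6 blended displacements


def ocean_height(x: float, z: float, t: float = 0.0) -> float:
    """Single definition shared by the 'render-time shader' and the 'proxy deformer'."""
    weights = blend_weights(x, z, len(TILES))
    return sum(w * tile_height(tile, x, z, t) for w, tile in zip(weights, TILES))


def displace_grid(size: float, resolution: int, t: float = 0.0):
    """Heights on a square grid: high resolution for rendering, low for the simulation proxy."""
    step = size / (resolution - 1)
    return [[ocean_height(i * step, j * step, t) for i in range(resolution)]
            for j in range(resolution)]


proxy = displace_grid(200.0, 21)  # coarse proxy to simulate against
```

Because the proxy and the fine grid call the same `ocean_height`, any point they share gets an identical height, which is the property that lets simulations built on the proxy line up with the render.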

Gimpville set up a general scene of about 200 m by 200 m, then built floating camera rigs and full particle systems. “We then attached tracked cameras to the floaters or manually placed cameras around our scene to capture the best angles for a particular shot,” says Eidsheim. “While using this general scene worked fine for most of the shots, some proved particularly difficult due to either waves not behaving throughout the shot or secondary cached elements appearing wrong in camera. For these cases we had to customize and create new scenes to make the effects work.”
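A floating camera rig of the kind described can be sketched as sampling the ocean height under a point, estimating the local slope by finite differences and tilting a null to match, with a tracked or hand-placed camera then parented to that null. This is a minimal sketch under stated assumptions: `wave_height` is a hypothetical stand-in for the HOT surface, the probe distance and mast height are made-up defaults, and the rotation sign conventions would depend on the host package.

```python
import math

# Hypothetical names and defaults; the rotation conventions are illustrative.


def wave_height(x: float, z: float, t: float) -> float:
    """Hypothetical stand-in for the ocean surface; any height function would do."""
    return 0.8 * math.sin(0.3 * x - 1.2 * t) + 0.3 * math.sin(0.5 * z + 0.7 * x - 0.9 * t)


def floater_transform(x, z, t, height_fn=wave_height, probe=0.5, mast=1.5):
    """Place a floater at (x, z) on the surface and tilt it with the local slope.

    Slopes come from central differences over +/- `probe` metres; the camera
    mount sits `mast` metres above the water along world up.
    """
    y = height_fn(x, z, t)
    dydx = (height_fn(x + probe, z, t) - height_fn(x - probe, z, t)) / (2.0 * probe)
    dydz = (height_fn(x, z + probe, t) - height_fn(x, z - probe, t)) / (2.0 * probe)
    pitch = math.degrees(math.atan(dydz))   # tilt along z
    roll = -math.degrees(math.atan(dydx))   # lean along x
    return {"translate": (x, y + mast, z), "rotate": (pitch, 0.0, roll)}


# Bake the floater over two seconds at 24 fps for a camera parked at (20, 35).
keys = [floater_transform(20.0, 35.0, f / 24.0) for f in range(48)]
```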

Particles were a big part of the storm and for those, artists relied on data coming from HOT combined with custom geometry operations to define areas where particles would emit. “We also used the 3D volumetric tools to create and displace puffs of clouds flying through the air, simulating mist and fog whipped up by the wind,” says Eidsheim.

For the interaction between the raft and the ocean, Gimpville used a scale model of the raft and created geometry at the intersection between the raft and the ocean proxy geometry. Explains Eidsheim: “This new geometry was then modified and fitted with attributes that allowed us to simulate splashes and foaming water around the raft as the waves hit it. In some of the shots, more complex splashes were needed at the front, and for this we set up a FLIP fluid simulation where the raft crashed into the water, or water crashed into the model. This was then further enhanced with spawned particles and meshed to give it a more watery look than you get by just using particles. For the lighting, we set up lightning lights that matched the sky plates and rendered these using light exports and AOVs so that comp could tweak and adjust the look, action and intensity post-render.”
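Rendering each lightning light to its own light export means comp can rebuild the lit image as a weighted sum, so the intensity and timing of each flash can change without a re-render. A minimal per-pixel sketch, assuming the light exports are additive and reproduce the beauty when every gain is 1; the pass names and the flicker envelope are hypothetical.

```python
import math

# Hypothetical pass names and envelope; assumes additive light exports.


def relight(base_rgb, light_passes, gains):
    """Rebuild a pixel from a base pass plus per-light exports scaled by comp gains.

    Lights missing from `gains` keep their rendered intensity (gain 1.0).
    """
    r, g, b = base_rgb
    for name, (lr, lg, lb) in light_passes.items():
        k = gains.get(name, 1.0)
        r, g, b = r + k * lr, g + k * lg, b + k * lb
    return (r, g, b)


def flash_gain(frame: int, start: int, duration: int = 6, peak: float = 3.0) -> float:
    """Hypothetical lightning envelope: instant strike, exponential decay, 0 outside the flash."""
    if frame < start or frame >= start + duration:
        return 0.0
    return peak * math.exp(-(frame - start) / 2.0)


# Retime a strike to frame 1012 and brighten it, purely in comp.
passes = {"lightning_left": (0.2, 0.2, 0.3), "lightning_right": (0.1, 0.1, 0.15)}
pixel = relight((0.05, 0.05, 0.08), passes, {"lightning_left": flash_gain(1013, 1012),
                                              "lightning_right": 0.0})
```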

In another Gimpville sequence, the raft is surrounded by a pod of blue whales, signifying that it has become one with nature. For the breaching whale water effects, the studio considered using Naiad, but SideFX had by then improved its FLIP toolset in Houdini 12. “We set up high-res FLIP simulations around each whale and surfaced them with Houdini’s Particle Fluid Surface SOP,” explains Eidsheim. “To get the simulated polygonal surface to blend with the rest of the ocean for perfect displacement rendering, we had to set up a system that straightened and flattened the outer areas of the surface, since the Particle Fluid Cache has a hard time surfacing particles perfectly flat even if there is no movement in the particles or the particle simulation is very dense.”

“We used COPs to map a black-and-white feathered texture based on the outer bounds of the actual surface geometry,” adds Eidsheim. “This texture was then combined with data measuring surface displacement and velocity in the shader and in SOP VOPs to act as a multiplier for the amount of ocean surface displacement. This way we were able to straighten out and control the outer edges of the simulated patch, seamlessly integrating the ripples around the whales with the rest of the ocean plane. On top of the surfaced particles we emit particles based on the contact between the whale and the surface, and on the curvature and speed of the surface. We then transfer a procedural noise velocity texture to the particle source. The particle motion is primarily driven by initial velocity and the velocity field from the FLIP sim.”
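The edge control described above can be sketched per point: a feathered mask that falls to zero at the patch boundary is multiplied by a term that also falls to zero where the simulated surface is barely moving, and the product decides how much of the FLIP surface's deviation from the ocean survives. The article does not say exactly how the COP texture and the displacement and velocity data were combined, so the simple product here is an assumption; the rectangular bounds stand in for the COP-generated texture, and all names and thresholds are hypothetical.

```python
# Hypothetical names and thresholds; an illustration of the masking idea only.


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite ramp from 0 at edge0 to 1 at edge1, clamped outside that range."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def edge_distance(x: float, z: float, bounds: tuple) -> float:
    """Distance from (x, z) to the nearest edge of a rectangular sim patch; 0 on or outside it."""
    xmin, xmax, zmin, zmax = bounds
    return max(0.0, min(x - xmin, xmax - x, z - zmin, zmax - z))


def sim_multiplier(x, z, speed, bounds, feather=5.0, still=0.05, moving=0.5):
    """How much sim surface to keep: 0 at the patch edge or in still water, 1 in active water."""
    edge = smoothstep(0.0, feather, edge_distance(x, z, bounds))
    activity = smoothstep(still, moving, speed)
    return edge * activity


def blended_height(ocean_h, sim_h, x, z, speed, bounds):
    """Ocean height plus the sim's deviation from it, scaled down toward the edges."""
    return ocean_h + sim_multiplier(x, z, speed, bounds) * (sim_h - ocean_h)


patch = (0.0, 40.0, 0.0, 40.0)  # a 40 m square patch around one whale
h = blended_height(0.2, 0.9, 20.0, 20.0, 1.2, patch)  # active water mid-patch keeps the sim
```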

Environments were also a feature of Gimpville’s Kon-Tiki contributions – they handled the plane flying through the clouds, the aircraft carrier and the harbor shots. “The carrier was modeled and textured in 3ds Max and Maya,” says Hansen, “while we used our in-house motion capture suite to animate the crowd, with animation tweaking in MotionBuilder and Softimage. All the elements were then imported into Houdini for simulation of flags, procedural animation of WW2 aircraft on deck, shading and rendering. The entire shot, including the ocean, is CG. The biggest challenge was adding enough detail to the aircraft carrier. It was hard to sell the scale of the model until we had enough geometry.”

For the Callao Naval Base shots, assets were again modeled in Maya and 3ds Max and imported into Houdini. “In Houdini, we simulated all the flags and banners using Houdini’s dynamic simulation tools and the Spring Surface Operator, and made custom shaders for all the elements before rendering them as 16-bit EXRs with light export passes, using Physically Based Shading in Mantra,” outlines Hansen.

Shots of a Douglas DC-3 on its approach to Lima, Peru were previs’d, and online satellite images and DEM data were then used to research the required backgrounds. “Eventually we bought a decent data set with 15 m resolution of an area north of Lima,” says Hansen. “We rendered the data sets in Mantra, stitched the renderings into a high-res panorama in Photoshop and used this as a base for further matte painting. The panorama and 3D camera from Maya were brought into Nuke and comped with the renderings of the airplane and clouds. After a lot of trial and error we finally found some aerial photos that matched the perspective of our render/matte painting. These images turned out to be a lifesaver for this particular shot.” The plane itself was a model purchased from TurboSquid, added to by Gimpville and shaded in Houdini.

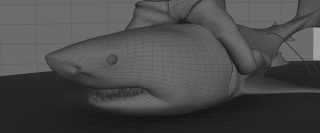

In one shot, the crew’s pet parrot Lorita takes to the water and is attacked by the sharks. ILP made a CG replica of Lorita based on reference photography, sculpting the parrot in Maya and Mudbox and adding feathers and fur. The sharks were also created directly from reference in Maya, Mudbox and ZBrush. A close-up shark for hero shots of it being killed on the raft included extra detail (the principal photography relied on a shark dummy). The sharks were designed with several scars and torn fins “which showed that these beasts have lived a rough life,” says Kaupang.

“ILP did an awesome job on the infamous white shark sequences,” says Kaupang. “They were very hard to do. When you pull a shark onto land, it loses a lot of its mobility and range of motion, especially a large shark. We studied hours and hours of reference footage from Discovery and National Geographic, but had to exaggerate the movements in the animation quite a lot to make the shots look menacing and entertaining. But we still had to keep it plausible and within what feels and is perceived as realistic.”

The shots were filmed on the deck using witness cameras to help with tracking later. “Digi-doubles were created and match moved to the actors, and were used for casting shadows and reflections onto the digital raft floor and digital sharks. HDR images were captured on set for CG lighting purposes, and we shot reference images of chrome/gray spheres, used to properly orient and calibrate the HDR dome lighting/reflection images for each shot.”

To help sell the interaction, Kaupang suggested the actor pull back one of the shark’s fins with his leg when he sat down on its back. “It worked great!” notes Kaupang. “In addition, the raft’s floor was recreated in CG so we could feel the impact and weight of the shark bouncing around. CG debris was added on the floor, and a CG cardboard box and tin can were added for even more interaction. All in all, a lot of subtle details really helped sell the shots, and they took a lot of time and skill from talented artists to get right.”

ILP used Naiad for the water sims required in the shots, which were rendered in V-Ray. “This allows us to get really quick results and feedback during the look development process,” says ILP’s Jacobson. “The base lighting was a V-Ray dome light with an HDRI image that we shot on set. We did use some extra key lights in order to get nicer kicks in the specularity and also to gain better control of the shadows that were cast from the shark onto the live action surroundings. The base shader for the shark was a V-Ray fast SSS shader, which we layered together with a separate wetness shader in order to control the specularities and reflections. It varied over time, since the shark was wetter just as they caught it, and it slowly dried up over the following shots.”

“The rigging of the shark was all done in Maya,” adds Jacobson. “It was a fairly basic rig with additional blendshapes to control certain shapes of the shark. We chose not to use a muscle system since we had relatively few shots. We found we got better control and quicker results by brute-forcing the animation on those scenes with softbody and some basic deformers. Another challenge was the floor of the raft under the shark. In order to convey the weight of the shark we had to replace the live action floor with a 3D floor in order to catch the deformation. We had to carefully rotoscope our actors and do a lot of cleanup work to remove the actors’ shadows from the plate. We did matchmove work on the actors in order to reconstruct their shadows cast onto the floor and shark. The scene with the actor sitting on top of the shark was also a very complex interaction shot. Since the rubber shark was very soft, the actor’s hands got buried deep in the body of the shark, so we needed to reconstruct his hands in comp in order to get fully visible hands during the entire action. Another challenge with this shot was that the rubber shark was smaller than the digital shark. We had to create a digital floor and lower it in order to fit our digital shark into the shot.”

ILP’s proprietary rendering engine, Tempest, was used for rendering particles and volumetric effects, including the blood. Compositing was carried out in Nuke.

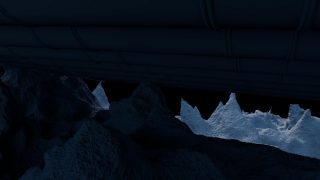

The reef area was one of Storm’s most challenging sets of shots, especially where the crew release the anchor. “For most of the above-water shots we divided the water surface into patches, typically a patch inside the reef and one outside the reef,” explains Storm Studios visual effects supervisor Hege Berg. “The joins between these patches were usually hidden under splashes or turbulent water. Some patches ended up being rather heavy Naiad simulations due to the area being close to camera and containing churning water, while others could do with a fairly simple displaced surface with just some extra splashes and foam added.”

Storm generated simpler water surfaces with the Houdini Ocean Toolkit. “If the shot required any additional interactions, say between the water surface and the raft, these would typically be simulated in Naiad and then integrated in comp,” says Berg. “All our water was rendered via Mantra using a shader written by our lead lighting TD.”

The studio also conducted an extensive practical shoot of foam and splashes at 4K in high speed. “Though some of it was used, the problem we had with the element water was that we just could not get enough bulk,” admits Berg. “The big splashes generated by the vast forces of the ocean are almost opaque, and with the elements we had shot we just could not get the right look despite extensive layering. We decided to generate a library of CG splashes instead, using Naiad for the sims and Mantra to render.”

“The compositors would thus map foam on top of the Houdini-generated ocean surface and add splashes from our computer-generated splash library,” adds Berg. “The foam was in some instances just stills of foam, but for some of the shots the foam pattern needed to interact with the raft and move, in which case we ended up generating a Naiad sim for which we only rendered out the foam particles from a top camera.”

For one particular side-on shot of the raft on a large wave just before it hits the reef, Storm previs’d the scene first in Maya, since no specific live action element had been filmed. “We were able to locate an element of the Kon-Tiki raft shot side-on, lying relatively still on a calm ocean, so we stuck this on a card for our previs,” explains Berg. “Once we had a layout the directors were happy with, we started work on creating the main wave using our ocean toolkit. Comp would take the work-in-progress renders of the wave and animate the raft. Thus the shot went back and forth between comp and FX a couple of times before we managed to get a wave-raft animation that utilized the little rocking motions of the raft plate.”

That gave Storm the main wave, but they still needed the interaction of the bow plowing into the water. “We achieved this by setting up a Naiad sim on a flat ocean where we matched the speed of the raft to generate a more detailed ocean surface around the bow of the raft,” says Berg. “This sim was subsequently deformed with the ocean toolkit to match the Houdini ocean surface. The foamy wake was a top-down render of Naiad-generated particles, which the compositors used as a texture sequence to project onto the ocean using the ocean’s UV coordinates. They also added patches of drifting foam, which were actually just still images of real foam shot by Aker Peer and Aker River.”
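Projecting a top-down render back onto the ocean works because an orthographic top camera and planar UVs on the ocean describe the same mapping from world X/Z to image coordinates. A minimal sketch, assuming the ocean's UVs are a planar projection over a known rectangle and that each frame of the texture sequence is a 2D list of foam values; the function names are hypothetical, and real comp packages would use their own UV-remapping nodes.

```python
# Hypothetical helper names for illustration only.


def planar_uv(x: float, z: float, origin: tuple, size: tuple) -> tuple:
    """Planar UVs over the ocean rectangle that an orthographic top camera frames exactly."""
    return ((x - origin[0]) / size[0], (z - origin[1]) / size[1])


def sample_bilinear(image, u: float, v: float) -> float:
    """Bilinearly sample a 2D list of floats at (u, v) in [0, 1], clamping at the borders.

    Row 0 is taken as v = 0; flip v if the render's rows run the other way.
    """
    h, w = len(image), len(image[0])
    fx = min(max(u, 0.0), 1.0) * (w - 1)
    fy = min(max(v, 0.0), 1.0) * (h - 1)
    x0, y0 = int(fx), int(fy)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = fx - x0, fy - y0
    top = image[y0][x0] * (1 - tx) + image[y0][x1] * tx
    bottom = image[y1][x0] * (1 - tx) + image[y1][x1] * tx
    return top * (1 - ty) + bottom * ty


def foam_at(x, z, frame, foam_sequence, origin=(0.0, 0.0), size=(100.0, 100.0)):
    """Look up the foam value for world point (x, z) in the texture sequence at `frame`."""
    image = foam_sequence[frame]
    return sample_bilinear(image, *planar_uv(x, z, origin, size))
```

Because the UVs are derived from the same rectangle the top camera frames, the foam stays locked to the ocean surface even after the surface is displaced, since displacement moves points but leaves their UVs unchanged.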

Using a library of Naiad splash sims rendered from five different camera angles, Storm added splashes around the raft and reef. “For the churning water around the reef we reused the Naiad sims we had generated for the close-up shots of churning water, only rendered with a camera matching the angle of this shot. The final integration of the splashes, churning water by the reef, calm inner water and the big outer wave was done in comp.”

The inner part of the reef was a textured plane that matched real coral and seaweed banks, with a calmer turquoise ocean surface added inside. “As a final touch,” notes Berg, “bespoke sims were done for close up splashes (the idea being that they would be generated from the camera boat) and droplets being left behind on the lens.”

An underwater shot of the raft on rocks relied on the water surface being split in two – a foreground patch that interacts and a churning background patch. “We did a Naiad simulation for the interaction, but we were able to reuse one of our pre-existing Naiad sims for the background patch,” says Berg. “The turbulent underwater elements, such as the debris, bubbles and smoke volumetrics, were all simulated in Houdini. Once we got the look the directors wanted, the simulations themselves were fairly straightforward. We took advantage of the side-on view, so we could simulate the particles in layers, and comp used the z-depth of the reef to mask them out appropriately. The reef was sculpted in ZBrush and then rendered via Mantra along with the FX and the raft itself.”
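In a side-on view each particle layer sits at a roughly constant distance from camera, so holding it out against the reef's z-depth pass reduces to a per-pixel depth comparison followed by an ordinary back-to-front "over". A minimal sketch, assuming one depth value per layer, premultiplied colour and an optional soft edge; all names are hypothetical.

```python
# Hypothetical helper names; one pixel at a time for clarity.


def depth_holdout(layer_depth: float, reef_depth: float, softness: float = 0.0) -> float:
    """1 where the particle layer is in front of the reef, 0 where the reef occludes it."""
    if softness <= 0.0:
        return 1.0 if layer_depth < reef_depth else 0.0
    t = (reef_depth - layer_depth) / softness + 0.5
    return min(max(t, 0.0), 1.0)


def over(fg_rgb, fg_alpha, bg_rgb):
    """Premultiplied 'over': fg + bg * (1 - alpha)."""
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg_rgb, bg_rgb))


def comp_pixel(bg_rgb, reef_depth, layers):
    """Composite particle layers back to front, each held out by the reef's z-depth.

    `layers` is a list of (depth, premultiplied_rgb, alpha) tuples.
    """
    result = bg_rgb
    for depth, rgb, alpha in sorted(layers, key=lambda layer: layer[0], reverse=True):
        m = depth_holdout(depth, reef_depth)
        result = over(tuple(c * m for c in rgb), alpha * m, result)
    return result
```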

Storm’s New York cityscapes were mostly matte paintings created in Photoshop and comp’d in Nuke. “For the CG traffic we had some cars in the plate that we could match to,” says Berg. “They were modeled and animated in Maya, and shaded and rendered with RenderMan. For this shot the client also asked for a large amount of atmospheric chimney smoke to illustrate that this was a cold winter day. At first we went out in Oslo with our own DSLR cameras to film chimney smoke around the city, but in the end we realized that we couldn’t get the perspective and scaling right for all the chimneys, so we ended up rendering it in Maya via RenderMan.”

Visualizing Kon-Tiki

“It is such a fantastic story it is hard to believe it is all true,” says production visual effects and animation supervisor Arne Kaupang. “The main character Thor Heyerdahl is a national hero and an icon here in Norway, and his crew actually encountered storms, huge whale sharks, strange bioluminescent creatures and pulled sharks onboard the raft.”

Fido has a whale of a time

Led by visual effects supervisor Mattias Lindahl, Swedish house FIDO completed several sequences including the crew’s encounter with a whale shark, CG ocean extensions and various CG fish and sea creatures.

Gimpville travels to space, and back again

Gimpville from Norway produced 74 shots for the film, ranging from a lengthy pullback travel shot, to ocean sims, blue whales and environments.

The camera travels through CG clouds created by Gimpville. “We used Houdini’s volumetric CVEX shaders to create high-res clouds at render time, while using the same underlying shader setup to displace and display a volumetric proxy of the clouds in the viewport,” explains Gimpville Houdini artist Ole Geir Eidsheim. “We did have some stability issues, since the whole scene was built to scale, and the early versions of the new Houdini 12 OpenGL viewport did not like that very much.”

“The ocean displacement was a shader with multiple Houdini Ocean Toolkit assets masked with fractal noise to avoid tiling patterns,” adds Eidsheim. “The outer blue atmosphere was added as a comp effect at a later stage to allow fine control against reference images. Render times were definitely a big issue with so many frames. Since everything was set up to scale and so many elements were to be rendered with raytracing, we had to strike a fine balance between volume sampling and displacement quality to stay below the required two-hour limit per frame.”
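To put that limit in perspective, here is a back-of-the-envelope upper bound for the travel shot, assuming (hypothetically) that every one of its 4,000 frames used the full two-hour budget on a single machine:

$$
4000\ \text{frames} \times 2\ \frac{\text{h}}{\text{frame}} = 8000\ \text{machine-hours} \approx 333\ \text{machine-days}
$$

Even on a hypothetical 50-node farm, that would be 160 hours (roughly 6.7 days) of wall-clock time for one full render pass, before any re-renders.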

The space background was an augmented image originally acquired from French photographer and reporter Serge Brunier, a noted specialist in astronomical imagery. “In the end,” says Hansen, “the only things not in 3D are the crew, some of the raft and the matte painting, and it took one guy (Christian Korhonen) three months to complete the 3D for the entire sequence.” Lead compositor Jan Svalland oversaw final compositing in Nuke, with the sun lens flare created in After Effects using the Optical Flares plugin.

Gimpville also contributed CG ocean sims for a storm sequence off the coast of South America. “We used the Houdini Ocean Toolkit, originally released by Drew Whitehouse, for the ocean displacement and rendering,” says Eidsheim. “A hallmark of HOT is that it tiles (because the displacement is computed over a limited-size area), so we had to construct shaders with procedural noise textures that blended between five or six different HOT displacements with different values and seeds to get a natural-looking ocean without visible tiling. Thanks to the flexible nature of Houdini’s VOP node toolkit, we set up an ocean displacement shader and made a geometry deformer that mirrors the settings of the ocean shader. That way we got visual feedback on how the surface would look at render time, and could also use it as a base for particle simulations. We could have a high-resolution grid displaced at render time, and a more manageable proxy to work with while doing simulations.”
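HOT itself is an FFT-based Tessendorf ocean, so the sketch below does not reproduce it. It swaps in a simple periodic sum of sines (`periodic_tile`, a hypothetical stand-in) to illustrate the two ideas Eidsheim describes: blending several tiles of different sizes and seeds through smooth noise masks so no single period shows, and driving both a dense render grid and a coarse simulation proxy from one displacement function. Tile sizes, seeds and noise settings are made-up values.

```python
import numpy as np


def periodic_tile(x, z, tile_size, seed, n_waves=8):
    """Hypothetical stand-in for one HOT layer: a height field that repeats
    exactly every `tile_size` metres (HOT itself uses an FFT spectrum)."""
    rng = np.random.default_rng(seed)
    h = np.zeros_like(x, dtype=float)
    for _ in range(n_waves):
        # Integer wave numbers guarantee the pattern is periodic over the tile.
        kx, kz = rng.integers(-4, 5, size=2)
        if kx == 0 and kz == 0:
            continue
        amp = rng.uniform(0.1, 0.5) / np.hypot(kx, kz)
        phase = rng.uniform(0.0, 2.0 * np.pi)
        h += amp * np.sin(2.0 * np.pi * (kx * x + kz * z) / tile_size + phase)
    return h


def fractal_noise(x, z, seed, octaves=4, base_wavelength=150.0):
    """Cheap mask noise whose random directions and wavelengths do not share the tiles' periods."""
    rng = np.random.default_rng(seed)
    n = np.zeros_like(x, dtype=float)
    amp, wavelength = 1.0, base_wavelength
    for _ in range(octaves):
        theta = rng.uniform(0.0, 2.0 * np.pi)
        phase = rng.uniform(0.0, 2.0 * np.pi)
        n += amp * np.sin(2.0 * np.pi * (np.cos(theta) * x + np.sin(theta) * z) / wavelength + phase)
        amp *= 0.5
        wavelength /= 2.1  # non-integer ratio so octaves do not line up with the tiles
    return n


def ocean_height(x, z, layers):
    """Blend several tiling layers, each weighted by its own smooth noise mask."""
    heights = np.array([periodic_tile(x, z, size, seed) for size, seed in layers])
    masks = np.array([np.exp(fractal_noise(x, z, seed + 1000)) for _, seed in layers])
    weights = masks / masks.sum(axis=0)  # positive weights that sum to 1 at every point
    return (weights * heights).sum(axis=0)


# Hypothetical layer set: five tile sizes (metres) and seeds.
LAYERS = [(40.0, 1), (57.0, 2), (73.0, 3), (91.0, 4), (110.0, 5)]


def displace_grid(size_m, resolution, layers=LAYERS):
    """Evaluate the same displacement on any grid, e.g. a render grid or a sim proxy."""
    coords = np.linspace(-size_m / 2.0, size_m / 2.0, resolution)
    x, z = np.meshgrid(coords, coords)
    return x, z, ocean_height(x, z, layers)


if __name__ == "__main__":
    _, _, proxy = displace_grid(200.0, 101)   # 2 m spacing, for simulations
    _, _, render = displace_grid(200.0, 801)  # 0.25 m spacing, for rendering
    print(proxy.shape, render.shape, float(np.abs(render[::8, ::8] - proxy).max()))
```

Because the proxy and the render grid call the same function, every proxy vertex lands on the high-resolution surface, which is the property a deformer "mirroring" the shader settings is meant to guarantee.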

Gimpville would set up a general scene about 200 m by 200 m, then build floating camera rigs and full particle systems. “We then attached tracked cameras to the floaters, or manually placed cameras around our scene to capture the best angles for a particular shot,” says Eidsheim. “While this general scene worked fine for most of the shots, some proved particularly difficult, either because the waves did not behave throughout the shot or because secondary cached elements appeared wrong in camera. For those cases we had to customize or create new scenes to make the effects work.”
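How Gimpville's floaters were built is not stated. A common approach, sketched here under that assumption, is to sample the ocean height at the floater's position and orient the rig to the local surface normal. `swell` is a hypothetical stand-in for the real ocean surface, and `mast` (the camera's height above the water) is a made-up parameter.

```python
import math
from typing import Callable, Tuple

HeightFn = Callable[[float, float, float], float]
Vec3 = Tuple[float, float, float]


def swell(x: float, z: float, t: float) -> float:
    """Hypothetical stand-in for the ocean surface: two travelling sine swells (metres)."""
    return 1.5 * math.sin(0.08 * x - 0.9 * t) + 0.6 * math.sin(0.05 * z + 0.13 * x - 0.6 * t)


def floater(height: HeightFn, x: float, z: float, t: float,
            eps: float = 0.5, mast: float = 1.2) -> Tuple[Vec3, Vec3]:
    """Return (camera position, up-vector) for a floater riding the surface at (x, z)."""
    y = height(x, z, t)
    # Central differences give the surface slope along x and z.
    dhdx = (height(x + eps, z, t) - height(x - eps, z, t)) / (2 * eps)
    dhdz = (height(x, z + eps, t) - height(x, z - eps, t)) / (2 * eps)
    # The normal of the surface y = h(x, z) is (-dh/dx, 1, -dh/dz), normalised.
    nx, ny, nz = -dhdx, 1.0, -dhdz
    n = math.sqrt(nx * nx + ny * ny + nz * nz)
    up = (nx / n, ny / n, nz / n)
    # Mount the camera `mast` metres along the normal, so it pitches and rolls with the swell.
    pos = (x + up[0] * mast, y + up[1] * mast, z + up[2] * mast)
    return pos, up


if __name__ == "__main__":
    for frame in range(0, 48, 12):  # 24 fps
        pos, up = floater(swell, 20.0, -35.0, frame / 24.0)
        print(frame, [round(c, 3) for c in pos], [round(c, 3) for c in up])
```

A production rig would likely average several samples across the raft's footprint rather than one point, to keep the camera from jittering on small waves.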

Particles were a big part of the storm and for those, artists relied on data coming from HOT combined with custom geometry operations to define areas where particles would emit. “We also used the 3D volumetric tools to create and displace puffs of clouds flying through the air, simulating mist and fog whipped up by the wind,” says Eidsheim.

For the interaction between the raft and the ocean, Gimpville used a scale model of the raft and created geometry where the raft intersected the ocean proxy geometry. Explains Eidsheim: “This new geometry was then modified and given attributes that allowed us to simulate splashes and foaming water around the raft as the waves hit it. In some of the shots, more complex splashes were needed at the front, and for this we set up a FLIP fluid simulation where the raft crashed into the water, or the water crashed into the model. This was then further enhanced with spawned particles and meshed to give it a more watery look than you get by just using particles. For the lighting, we set up lightning lights that matched the sky plates and rendered these using light exports and AOVs, so that comp could tweak the look, action and intensity post-render.”
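Rendering each lightning light to its own export pass works because light is additive: assuming every light is exported and there is no other emission in the scene, the beauty is the sum of the per-light passes, so comp can regrade or re-time each one independently. A minimal NumPy sketch follows; the pass names, the gain and tint parameters and the flash curve are all hypothetical, not Gimpville's actual setup.

```python
import numpy as np


def recombine(light_passes, gains=None, tints=None):
    """Rebuild a beauty image from per-light passes (linear RGB, H x W x 3 arrays).

    gains: optional dict name -> scalar; tints: optional dict name -> RGB triple.
    """
    gains = gains or {}
    tints = tints or {}
    out = None
    for name, img in light_passes.items():
        tint = np.asarray(tints.get(name, (1.0, 1.0, 1.0)), dtype=float)
        contrib = img * gains.get(name, 1.0) * tint  # passes are additive, so they simply sum
        out = contrib if out is None else out + contrib
    return out


def lightning_gain(frame, strike_frames, decay=0.6):
    """Hypothetical flash curve: each strike jumps to 1.0 and decays geometrically per frame."""
    g = 0.0
    for s in strike_frames:
        if frame >= s:
            g = max(g, decay ** (frame - s))
    return g


if __name__ == "__main__":
    sky = np.full((4, 4, 3), 0.05)
    bolt = np.full((4, 4, 3), 0.8)
    for f in range(12):
        beauty = recombine({"sky": sky, "bolt": bolt},
                           gains={"bolt": lightning_gain(f, [3, 9])},
                           tints={"bolt": (0.9, 0.95, 1.1)})  # slightly blue flash
        print(f, round(float(beauty[0, 0, 2]), 3))
```

Keeping the flash timing as a comp-side gain curve means a director's note about when the lightning strikes costs a re-comp, not a re-render.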

In another Gimpville sequence, the raft is surrounded by a pod of blue whales, signifying that it has become one with nature. For the breaching-whale water effects, the studio considered using Naiad, but SideFX had by then improved its FLIP toolset in Houdini 12. “We set up high-res FLIP simulations around each whale and surfaced them with Houdini’s Particle Fluid Surface SOP,” explains Eidsheim. “To get the simulated polygonal surface to blend with the rest of the ocean for seamless displacement rendering, we had to set up a system that straightened and flattened the outer areas of the surface, since the Particle Fluid Cache has a hard time surfacing particles perfectly flat, even when the particles are not moving or the simulation is very dense.”

“We used COPs to map a black-and-white feathered texture based on the outer bounds of the actual surface geometry,” adds Eidsheim. “This texture was then combined with data measuring surface displacement and velocity, in the shader and in SOP VOPs, to act as a multiplier for the amount of ocean surface displacement. This way we were able to straighten out and control the outer edges of the simulated patch, seamlessly integrating the ripples around the whales with the rest of the ocean plane. On top of the surfaced particles, we emitted particles based on the contact between the whale and the surface, and on the curvature and speed of the surface. We then transferred a procedural noise velocity texture to the particle source. The particle motion was primarily driven by initial velocity and the velocity field from the FLIP sim.”
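The feathered mask is what lets the simulated patch settle onto the surrounding ocean at its boundary. The sketch below is one plausible reading of that idea, assuming a circular patch around the whale and a FLIP surface whose height is measured from a flat rest level; it uses only distance from the patch edge, whereas the production setup also factored in surface displacement and velocity. All function names are hypothetical.

```python
import numpy as np


def smoothstep(e0, e1, x):
    """Hermite ramp from 0 to 1; zero slope at both ends avoids a visible crease."""
    t = np.clip((x - e0) / (e1 - e0), 0.0, 1.0)
    return t * t * (3.0 - 2.0 * t)


def edge_mask(x, z, center, radius, feather):
    """Feathered mask for a circular sim patch: 0 at and outside the edge,
    rising to 1 once a point is `feather` metres inside it."""
    r = np.hypot(x - center[0], z - center[1])
    return smoothstep(0.0, feather, radius - r)


def blend_patch(ocean_h, sim_h, mask, rest_level=0.0):
    """Add the simulated offset only where the mask allows, so the patch edge
    lies exactly on the ocean displacement and the interior keeps the sim detail."""
    return ocean_h + mask * (sim_h - rest_level)


if __name__ == "__main__":
    x = np.linspace(-40.0, 40.0, 9)
    z = np.zeros_like(x)
    m = edge_mask(x, z, center=(0.0, 0.0), radius=30.0, feather=10.0)
    print(np.round(m, 3))
```

Using a smoothstep rather than a linear ramp keeps the blended surface's slope continuous at both ends of the feather, which matters once the result is lit with sharp specular highlights.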

Environments were also a feature of Gimpville’s Kon-Tiki contributions: the studio handled the plane flying through the clouds, the aircraft carrier and the harbor shots. “The carrier was modeled and textured in 3ds Max and Maya,” says Hansen, “while we used our in-house motion capture suite to animate the crowd, with animation tweaking in MotionBuilder and Softimage. All the elements were then imported into Houdini for simulation of flags, procedural animation of the WW2 aircraft on deck, shading and rendering. The entire shot, including the ocean, is CG. The biggest challenge was adding enough detail to the aircraft carrier. It was hard to sell the scale of the model until we had enough geometry.”

For the Callao Naval Base shots, assets were again modeled in Maya and 3ds Max and imported into Houdini. “In Houdini, we simulated all the flags and banners using Houdini’s dynamic simulation tools and the Spring Surface Operator, and made custom shaders for all the elements before rendering them as 16-bit EXRs with light export passes, using Physically Based Shading in Mantra,” outlines Hansen.

Shots of a Douglas DC-3 on its approach to Lima, Peru were previs’d, and then satellite images and online DEM data were used to research the required backgrounds. “Eventually we bought a decent data set with 15 m resolution covering an area north of Lima,” says Hansen. “We rendered the data sets in Mantra, stitched the renders into a high-res panorama in Photoshop and used this as a base for further matte painting. The panorama and 3D camera from Maya were brought into Nuke and comped with the renders of the airplane and clouds. After a lot of trial and error we finally found some aerial photos that matched the perspective of our render and matte painting. These images turned out to be a lifesaver for this particular shot.” The plane itself was a model purchased from TurboSquid, which Gimpville added detail to and shaded in Houdini.

Sharks!

Important Looking Pirates, a Swedish VFX outfit, worked on the visual effects for some of Kon-Tiki’s most memorable scenes: the encounter with white sharks. The work spanned 58 shots and comprised digital sharks, innards, blood, bubbles and water sims. ILP’s visual effects supervisor was Niklas Jacobson.

In one shot, the crew’s pet parrot Lorita takes to the water and is attacked by the sharks. ILP made a CG replica of Lorita based on reference photography, sculpting the parrot in Maya and Mudbox and adding feathers and fur. The sharks were also created directly from reference in Maya, Mudbox and ZBrush. A close-up shark for hero shots of it being killed on the raft included extra detail (principal photography relied on a shark dummy). The sharks were designed with several scars and torn fins, “which showed that these beasts have lived a rough life,” says Kaupang.

“ILP did an awesome job on the infamous white shark sequences,” says Kaupang. “They were very hard to do. When you pull a shark onto land, it loses a lot of its mobility and range of motion, especially a large shark. We studied hours and hours of reference footage from Discovery and National Geographic, but had to exaggerate the movements in the animation quite a lot to make the shots look menacing and entertaining, while still keeping them plausible and within what feels and is perceived as realistic.”

The shots were filmed on the deck using witness cameras to help later with tracking. “Digi-doubles were created and match moved to the actors, and were used for casting shadows and reflections onto the digital raft floor and digital sharks. HDR images were captured on-set for CG lighting purposes and we shot reference images of chrome/gray spheres, used to properly orient and calibrate the HDR dome lighting/reflection images for each shot.”

To help sell the interaction, Kaupang suggested that the actor pull back one of the shark’s fins with his leg when he sat down on its back. “It worked great!” notes Kaupang. “In addition, the raft’s floor was recreated in CG so we could feel the impact and weight of the shark bouncing around. CG debris was added on the floor, and a CG cardboard box and tin can were added for even more interaction. All in all, a lot of subtle details that really helped sell the shots, and they took a lot of time and skill from talented artists to get right.”

ILP used Naiad for the water sims required in the shots, which were rendered in V-Ray. “This allows us to get really quick results and feedback during the look development process,” says ILP’s Jacobson. “The base lighting was a V-Ray dome light with an HDRI image that we shot on set. We did use some extra key lights to get nicer kicks in the specularity and also to gain better control of the shadows that were cast from the shark onto the live-action surroundings. The base shader for the shark was a V-Ray fast SSS shader, which we layered with a separate wetness shader in order to control the specularities and reflections. It varied over time, since the shark was wetter just after they caught it, and it slowly dried out over the following shots.”
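Layering a wetness shader over the SSS base amounts to blending two sets of specular parameters by a wetness amount that falls as the shark dries out. The sketch below assumes a simple linear blend and an exponential drying curve; the parameter values, the half-life and the `Spec` type are all made up for illustration, and V-Ray's own material layering is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    reflection: float  # reflection amount, 0..1
    glossiness: float  # reflection glossiness, 0..1 (1 = mirror-like)


DRY = Spec(reflection=0.08, glossiness=0.45)  # hypothetical values
WET = Spec(reflection=0.60, glossiness=0.92)


def wetness(seconds_on_deck: float, half_life: float = 600.0) -> float:
    """Hypothetical drying curve: fully wet when caught, halving every `half_life` seconds."""
    return 0.5 ** (seconds_on_deck / half_life)


def layered(w: float) -> Spec:
    """Blend the dry base and the wet layer by wetness w, clamped to [0, 1]."""
    w = min(max(w, 0.0), 1.0)

    def lerp(a: float, b: float) -> float:
        return a * (1.0 - w) + b * w

    return Spec(lerp(DRY.reflection, WET.reflection), lerp(DRY.glossiness, WET.glossiness))


if __name__ == "__main__":
    # Hypothetical story time (seconds since the catch) for four consecutive shots.
    for shot, t in enumerate([0, 300, 900, 1800]):
        print(shot, layered(wetness(t)))
```

Driving the blend from story time rather than per-shot hand settings keeps the drying consistent across a cut sequence, which is the continuity problem Jacobson describes.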

“The rigging of the shark was all done in Maya,” adds Jacobson. “It was a fairly basic rig with additional blendshapes to control certain shapes of the shark. We chose not to use a muscle system since we had relatively few shots. We felt we got better control and quicker results by brute-forcing the animation in those scenes with softbody and some basic deformers. Another challenge was the floor of the raft under the shark. To convey the weight of the shark we had to replace the live-action floor with a 3D floor in order to catch the deformation. We had to carefully rotoscope our actors and do a lot of cleanup work to remove the actors’ shadows from the plate. We did matchmove work on the actors in order to reconstruct their shadows cast onto the floor and shark. The scene with the actor sitting on top of the shark was also a very complex interaction shot. Since the rubber shark was very soft, the actor’s hands sank deep into its body, so we needed to reconstruct his hands in comp to keep them fully visible throughout the action. Another challenge with this shot was that the rubber shark was smaller than the digital shark. We had to create a digital floor and lower it in order to fit our digital shark into the shot.”

ILP’s proprietary rendering engine, Tempest, was used for rendering particles and volumetric effects, including the blood. Compositing was carried out in Nuke.

Brewing a Storm

Norwegian effects house Storm Studios realized eight sequences for the film, totaling 88 shots. This work included the opening sequence in which Thor falls into the water, accomplished via a dry-for-wet shoot; CG establishers of Fatu Hiva Island; photo replacements on green cards; the New York sequence; the aircraft carrier interior featuring CG extensions; the booby bird shots; the reef crash with ocean sims; and Raroia Island.

The reef area was one of Storm’s most challenging sets of shots, especially where the crew release the anchor. “For most of the above-water shots we divided the water surface into patches, typically one patch inside the reef and one outside,” explains Storm Studios visual effects supervisor Hege Berg. “The joins between these patches were usually hidden under splashes or turbulent water. Some patches ended up being rather heavy Naiad simulations because the area was close to camera and contained churning water, while others could make do with a fairly simple displaced surface with just some extra splashes and foam added.”

Storm generated simpler water surfaces with the Houdini Ocean Toolkit. “If the shot required any additional interaction, say between the water surface and the raft, this would typically be simulated in Naiad and then integrated in comp,” says Berg. “All our water was rendered in Mantra using a shader written by our lead lighting TD.”

The studio also conducted an extensive practical shoot of foam and splashes at 4K in high speed. “Though some of it was used, the problem we had with the water elements was that we just could not get enough bulk,” admits Berg. “The big splashes generated by the vast forces of the ocean are almost opaque, and with the elements we had shot we just could not get the right look despite extensive layering. We decided to generate a library of CG splashes instead, using Naiad for the sims and Mantra to render.”

“The compositors would thus map foam on top of the Houdini-generated ocean surface and add splashes from our CG splash library,” adds Berg. “In some instances the foam was just stills of foam, but for some of the shots the foam pattern had to interact with the raft and move, in which case we ended up generating a Naiad sim and rendering out only the foam particles from a top camera.”

For one particular side-on shot of the raft on a large wave just before it hits the reef, Storm previs’d the scene in Maya first, since no specific live-action element had been filmed. “We were able to locate an element of the Kon-Tiki raft shot side-on, lying relatively still on a calm ocean, so we stuck this on a card for our previs,” explains Berg. “Once we had a layout the directors were happy with, we started work on creating the main wave using our ocean toolkit. Comp would take the work-in-progress renders of the wave and animate the raft. The shot went back and forth between comp and FX a couple of times before we managed to get a wave-raft animation that made use of the little rocking motions of the raft plate.”

That gave Storm the main wave, but they still needed the interaction of the bow plowing into the water. “We achieved this by setting up a Naiad sim on a flat ocean, matching the speed of the raft, to generate a more detailed ocean surface around the bow,” says Berg. “This sim was then deformed with the ocean toolkit to match the Houdini ocean surface. The foamy wake was a top-down render of Naiad-generated particles, which the compositors used as a texture sequence projected onto the ocean using the ocean’s UV coordinates. They also added patches of drifting foam, which were actually just still images of real foam shot by Aker Peer and Aker River.”
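The wake trick treats a top-down render as a texture in the ocean's planar UV space. A minimal NumPy sketch, assuming the ocean's UVs are a planar mapping that matches the extent of an orthographic top camera (`bounds`) and that the foam is a single-channel matte; in production this lookup happened in comp, and the function names here are hypothetical.

```python
import numpy as np


def world_to_uv(x, z, bounds):
    """Map ocean-plane (x, z) to 0..1 UVs of an orthographic top-down render covering `bounds`."""
    x0, x1, z0, z1 = bounds
    return (x - x0) / (x1 - x0), (z - z0) / (z1 - z0)


def sample_bilinear(img, u, v):
    """Bilinearly sample a single-channel image (rows = v, columns = u) at UVs in 0..1."""
    h, w = img.shape
    px = np.clip(u, 0.0, 1.0) * (w - 1)
    py = np.clip(v, 0.0, 1.0) * (h - 1)
    c0 = np.floor(px).astype(int)
    r0 = np.floor(py).astype(int)
    c1 = np.minimum(c0 + 1, w - 1)
    r1 = np.minimum(r0 + 1, h - 1)
    fx, fy = px - c0, py - r0
    top = img[r0, c0] * (1 - fx) + img[r0, c1] * fx
    bottom = img[r1, c0] * (1 - fx) + img[r1, c1] * fx
    return top * (1 - fy) + bottom * fy


def foam_on_ocean(foam_frame, rest_x, rest_z, bounds):
    """Look up foam for each ocean point from its rest (undisplaced) position, so the
    foam rides up and down with the waves instead of sliding across them."""
    u, v = world_to_uv(rest_x, rest_z, bounds)
    return sample_bilinear(foam_frame, u, v)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    foam = rng.random((64, 64))          # stand-in for one frame of the top-down foam render
    xs = np.linspace(-20.0, 20.0, 5)
    print(foam_on_ocean(foam, xs, np.zeros_like(xs), (-25.0, 25.0, -25.0, 25.0)))
```

Whether image row 0 corresponds to the near or far edge of the top camera is a convention that would have to be matched to the actual render.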

Using a library of Naiad splash sims rendered from five different camera angles, Storm added splashes around the raft and reef. “For the churning water around the reef we reused the Naiad sims we had generated for the close-up shots of churning water, only rendered with a camera matching the angle of this shot. The final integration of the splashes, churning water by the reef, calm inner water and the big outer wave was done in comp.”

The inner part of the reef was a textured plane that matched real coral and seaweed banks, with a calmer turquoise ocean surface added inside. “As a final touch,” notes Berg, “bespoke sims were done for close up splashes (the idea being that they would be generated from the camera boat) and droplets being left behind on the lens.”

An underwater shot of the raft on the rocks relied on splitting the water surface in two: a foreground patch that interacts and a churning background patch. “We did a Naiad simulation for the interaction, but we were able to reuse one of our pre-existing Naiad sims for the background patch,” says Berg. “The turbulent underwater elements, such as the debris, bubbles and smoke volumes, were all simulated in Houdini. Once we got the look the directors wanted, the simulations themselves were fairly straightforward. We took advantage of the side-on view and could thus simulate the particles in layers, and comp used the z-depth of the reef to mask them out appropriately. The reef was sculpted in ZBrush and then rendered, along with the FX and the raft itself, in Mantra.”
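Masking particle layers with the reef's z-depth is a depth holdout: each layer survives only where it sits in front of the reef. A minimal NumPy sketch, assuming premultiplied RGBA layers with per-pixel depth in the same units as the reef's z-depth pass and a made-up `softness` ramp; Storm's actual comp setup is not documented, and the function names are hypothetical.

```python
import numpy as np


def depth_holdout(rgb, alpha, layer_depth, reef_depth, softness=0.05):
    """Keep a premultiplied layer only where it is nearer to camera than the reef.

    A short linear ramp of width `softness` (scene units) softens the cut-off
    instead of producing an aliased hard edge.
    """
    keep = np.clip((reef_depth - layer_depth) / softness + 0.5, 0.0, 1.0)
    return rgb * keep[..., None], alpha * keep


def over(fg_rgb, fg_alpha, bg_rgb):
    """Standard premultiplied 'over' operation."""
    return fg_rgb + bg_rgb * (1.0 - fg_alpha)[..., None]


def comp_layers(background, reef_depth, layers):
    """Composite particle layers, given far-to-near as (rgb, alpha, depth), over the reef plate."""
    out = background
    for rgb, alpha, depth in layers:
        held_rgb, held_alpha = depth_holdout(rgb, alpha, depth, reef_depth)
        out = over(held_rgb, held_alpha, out)
    return out


if __name__ == "__main__":
    h, w = 4, 6
    reef_z = np.tile(np.linspace(1.0, 6.0, w), (h, 1))  # reef recedes to the right
    plate = np.full((h, w, 3), 0.1)
    bubbles = (np.full((h, w, 3), 0.4), np.full((h, w), 0.5), np.full((h, w), 3.0))
    print(comp_layers(plate, reef_z, [bubbles])[0, :, 0])
```

Because the side-on view lets each particle layer be simulated and rendered separately, this per-layer holdout is all comp needs; no deep compositing is required.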

Storm’s New York cityscapes were mostly matte paintings created in Photoshop and comp’d in Nuke. “For the CG traffic we had some cars in the plate that we could match to,” says Berg. “They were modeled and animated in Maya, and shaded and rendered with RenderMan. For this shot the client also asked for a large amount of atmospheric chimney smoke to illustrate that this was a cold winter day. At first we went out around Oslo with our own DSLR cameras to film chimney smoke, but in the end we realized that we couldn’t get the perspective and scale right for all the chimneys, so we ended up rendering the smoke in Maya via RenderMan.”